Generative AI adoption requires company transparency and accountability, and consumer education

Generative AI is a technology that can use written or spoken language inputs as prompts to generate something entirely new. A well-known version of this technology in action is ChatGPT, a large language model chatbot developed by OpenAI that has been the subject of a number of news stories. Much of the public discourse has focused on its potential to revolutionize the way we work and live.

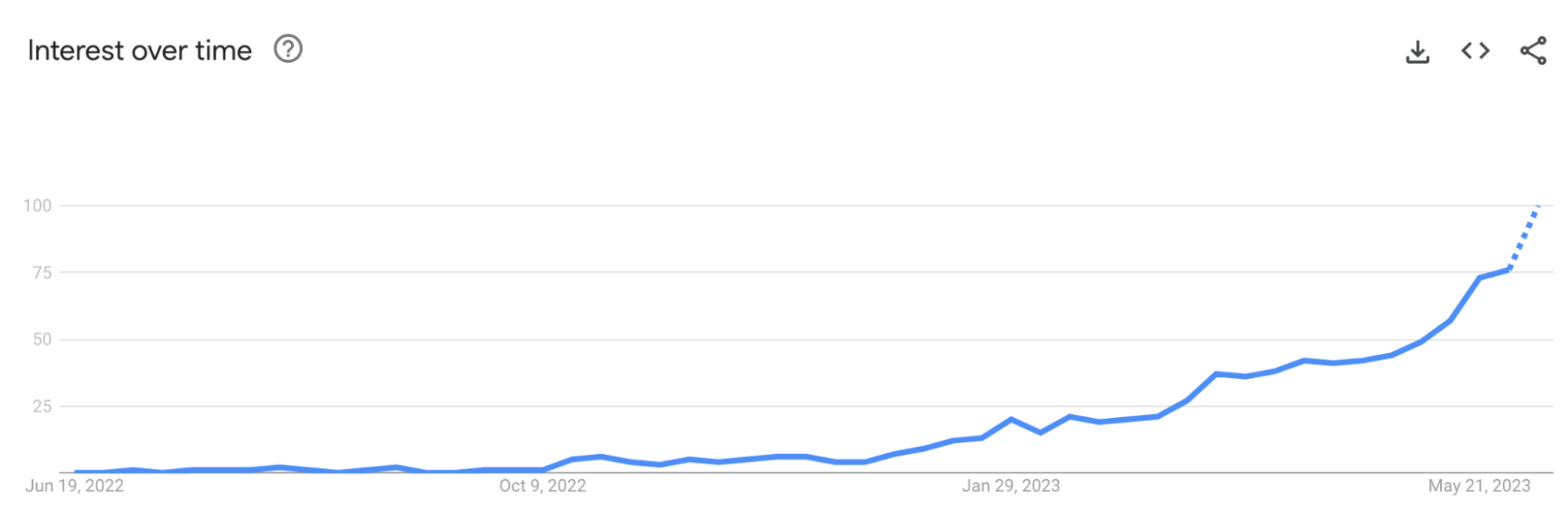

In the last year, we’ve seen a significant shift in general awareness about Generative AI. The chart above shows interest in the search term “Generative AI” on Google over the last year. A value of 100 is the peak popularity for the term, which we’ve started to creep towards in the last month or so. This search interest is driven by news stories and growing awareness of the different available use cases and how they might touch every part of our collective lives.

Between news coverage and growing Google search trends, you’d expect to see similar growth in user adoption or user sign-ups for Generative AI-powered services. That is, perhaps surprisingly, not the case. According to a Pew Research Center survey, while about six-in-ten U.S. adults (58%) are familiar with ChatGPT, only 14% of U.S. adults have tried the technology.

With all of the coverage and awareness around Generative AI, where are the users?

If you consume any media in the U.S., you’re likely aware of the HBO show Succession. It dominated social conversations and news stories in the weeks leading up to the series finale. Succession’s series finale drew a respectable 2.9 million viewers. A media analysis company that uses AI as part of its analysis found that while Succession was covered six times more than any other show analyzed, it had the second-lowest average readership. Those who write about media for a living seemed to be more interested in the story of a family that owns a media conglomerate than the average viewer was. Similarly, people who write about technology for a living are more likely to be interested in news about free Generative AI tools than the general population is.

Add to that the reality that many of the news stories about ChatGPT focused on its potential to be used for harmful purposes. A Pew Research Center survey from 2021 found that Americans were more likely to express concerns than excitement about the increased use of artificial intelligence in daily life.

You have also likely seen many “gotcha” posts on social media showing the sometimes absurd responses to prompts, or the bevy of Generative AI-created images of people with three mouths. While those posts are fun and get a lot of attention, they make it harder for information about relevant and helpful use cases to bubble up to the top of the conversation about the technology.

With many viewing ChatGPT as the face of Generative AI, new users may have quickly gotten frustrated using a tool that was still in development. As anyone who designs or markets technologies knows, stickiness is key. A one-time use just to check things out does not always equate to ongoing usage and growth of active users.

Someone who has been promised the future and experiences the reality of a still-developing technology may lose trust and confidence.

It is on the technology companies working on Generative AI to build that consumer trust and confidence, which is required for user adoption.

How companies can build trust in Generative AI

Be transparent about how the technology works.

Consumers should be able to understand how Generative AI is used to generate content, and they should be able to trust that the results provided are accurate and unbiased. Tech companies should continue to invest in research and development to improve the accuracy and reliability of Generative AI.

Provide clear and concise information about the limitations of the technology.

Generative AI is not perfect, and it can sometimes produce inaccurate or misleading results. It is important to be upfront about these limitations so that consumers can make informed decisions about how to use the technology.

Provide clear and concise privacy policies.

Consumers should know how their data is being used, and they should be able to trust that their data is being protected.

Be transparent about the risks of Generative AI.

This could include discussing the potential for the technology to be used for malicious purposes, such as creating deepfakes or spreading misinformation.

Use Generative AI to create positive experiences for consumers.

This could include using the technology to generate personalized content, provide customer support, or create new products and services.

Partner with trusted organizations.

This could include working with universities, research institutions, or government agencies to develop and use Generative AI responsibly.

Encourage user feedback.

Tech companies should be open to user feedback about improving the accuracy and reliability of Generative AI.

By taking these steps, tech companies can help ensure that Generative AI is used for good and that consumers trust the technology enough to feel comfortable using it.